Mercedes to accept legal responsibility for accidents involving self-driving cars

Carmakers must educate customers on their responsibility to take over within a few seconds, say experts

Mercedes has announced that it will take legal responsibility for any crashes that occur while its self-driving systems are engaged.

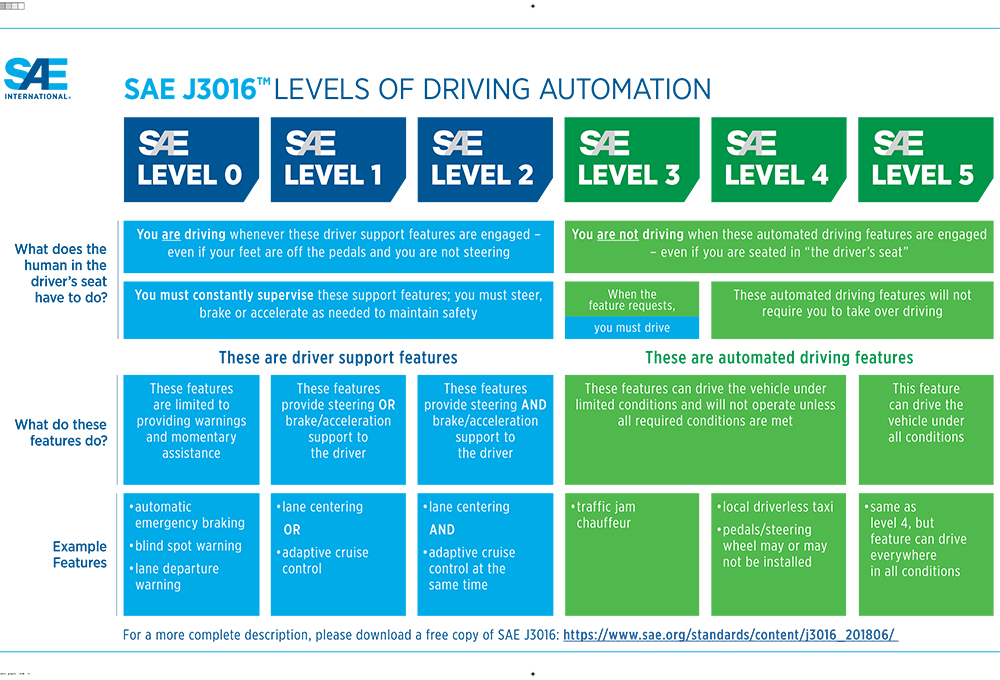

The company is deploying its “Drive Pilot” technology on its new S-Class and EQS saloon models. Drive Pilot is rated “Level 3” on the six-tier autonomy scale devised by the Society of Automotive Engineers (SAE), which ranges from Level 0 (no automated driver assistance) to Level 5 (the car drives itself everywhere without any input from the vehicle occupants).

Level 3 autonomy means that drivers may take their hands off the wheel and undertake other tasks, such as reading a book, while the car assumes full control of all driving functions. However, this is only in specific conditions, such as in low-speed traffic on motorways, and the person in the driver’s seat must be able to retake control within a few seconds of an alert from the car.

This is a big leap from Level 2 autonomy, which requires hands-on-wheel supervision from the driver at all times, and which is currently commonplace on new cars in the form of adaptive cruise control and automated lane-keeping.

Some cars from the likes of Audi, Mercedes, BMW, Genesis and Tesla have systems so advanced that they are considered to sit somewhere between Levels 2 and 3, a category experts have dubbed Level 2+. However, introducing Level 3 autonomy on production cars is not yet legal in many territories.

The UK government said last year that a Level 3 technology called automated lane keeping systems (ALKS) will permit cars to travel at up to 37mph on motorways, though a change to the Highway Code is needed before it becomes legal. In December last year, What Car? reported that the change may come in “early 2022”.

How Mercedes’ Drive Pilot will work

The introduction of ALKS in the UK would open the way for Mercedes’ Drive Pilot to be switched on, pending type approval. Once that happens, Mercedes has said it will accept full responsibility for accidents caused by faults with the technology, though not for those caused by a driver’s failure to comply with their duty of care.

If the system disengages, which may happen when the car encounters roadworks, tunnels or inclement weather, Drive Pilot will give the driver a 10-second warning to take back control, at which point Mercedes’ legal responsibility for any crash ends. Nor will the company be liable for crashes that occur if the driver fails to take over in time.

As the “user in charge” rather than the “driver” while the vehicle is in self-driving mode, the occupant will not be legally liable for offences such as speeding or dangerous driving.

What happens if an accident is detected while the car is driving itself is another matter. In 2016, Mercedes’ head of driver assistance systems admitted that if a Mercedes driving autonomously detected an accident, it would attempt to save the occupant at all costs, even at the expense of pedestrians.

Christoph von Hugo said: “If you know you can save at least one person, at least save that one. Save the one in the car,” adding, “If all you know for sure is that one death can be prevented, then that’s your first priority.”

However, Level 3 systems are unlikely to face life-or-death decisions, given the relatively low speeds and the types of road on which they will be permitted to operate.

So far, Mercedes’ self-driving system can only be used on German autobahns that have been specifically mapped by the carmaker, as well as on some roads in Nevada and California in the United States. In those territories it operates only at speeds of up to around 37mph, matching the forthcoming UK regulations.

Drivers must be educated about liability

Mercedes’ announcement follows the publication of a joint report in January by the Law Commissions of England, Wales and Scotland that called for law reforms to exempt owners of self-driving cars from legal responsibility should a crash occur due to a failure of autonomous driving systems.

“The issue of liability in automated vehicles is complex and nuanced,” said Matthew Avery, chief research strategy officer at Thatcham Research, which consulted on the report. “It’s too crude to suggest that the carmaker should be liable in all circumstances; there will be times when an accident is and isn’t the carmaker’s responsibility.

“What is apparent in the case of Mercedes, the first to have approval – albeit in Germany – for technology that will allow drivers to disengage and do other things, is that when the automated system is in control, the carmaker will be liable.

“What’s less straightforward is an accident that occurs when the driver has failed ‘to comply with their duty of care’, for example when refusing to retake control of the car when prompted.”

Avery said it will be the responsibility of carmakers to ensure British drivers of their cars are “confident, comfortable and have a strong grasp of their legal responsibilities” in accordance with the Road Traffic Act.

“Absolute clarity is required for drivers in terms of their legal obligations behind the wheel and their understanding of how the system operates, especially during a handover from system to driver.”

He suggested that drivers who have become engrossed in other tasks for extended periods may take a long time to regain control when prompted by the car — something he called “coming back into the loop”.

“Insurance claims will require scrutiny, so the provision of data to help insurers understand who was in control of the vehicle at the time of an accident, system or driver, will also be vital,” he said.

“Trust will diminish if confusion reigns and drawn-out legal cases become common, hampering adoption of the technology and the realisation of its many societal benefits.”

Avery believes that a rating system will help motorists know which systems are the best, and therefore which ones they can trust.

“Fostering consumer confidence and trust in the first iterations of automated driving is paramount. This is where independent consumer rating will have an important role to play in driving safe adoption by making people aware of systems that are not as good as others.”

Legally speaking, under tougher new rules on mobile phone use behind the wheel, the “user in charge” of an autonomous vehicle will not be permitted to use their phone unless it is secured in a cradle and used for navigation. However, the revised Highway Code is likely to be amended again alongside the introduction of ALKS.

At present, drivers caught using a phone behind the wheel face a £200 fine and six penalty points, though a ban and a fine of up to £1,000 are possible.

Mercedes is the second manufacturer to receive legal approval in any territory for a Level 3 autonomous driving system, after Honda’s Traffic Jam Pilot for the Honda Legend was approved in Japan in 2020.

Audi’s A8 is said to have Level 3-capable technology, though it has not yet been deployed on production models. In April, BMW will launch its next-generation 7 Series and the electric i7 equivalent, both of which will be equipped with Level 3 technology.
